Solving the ECS Task Definition Update Challenge in CodePipeline Deployments

The ECS Deploy action in CodePipeline updates the service with the Task Definition currently associated with the running service, not the latest one you've registered. Any changes to the Task Definition won't be applied unless you force the service to use the newest Task Definition.

While working with one of our clients recently, we encountered a subtle yet impactful issue with AWS CodePipeline's default Deploy action for ECS services. The problem revolved around the ECS service not using the latest Task Definition during deployments, leading to unexpected behaviors and outdated configurations running in production. In this blog post, we'll dive into the issue, explore why it happens, and present a CloudFormation-based solution to ensure your ECS services always run the latest Task Definitions.

The Problem: ECS Deploy Action Doesn't Use the Latest Task Definition

Our client had a continuous integration and continuous deployment (CI/CD) pipeline set up in AWS CodePipeline, with an ECS Deploy action to update their services. They noticed that even after updating the Task Definition (for example, changing environment variables or resource settings), the ECS service didn't pick up these changes during deployment. Instead, it kept running with the old Task Definition, which caused confusion and left the running configuration out of sync with the one in the repository.

Why Does This Happen?

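By default, the ECS Deploy action in CodePipeline does not deploy the latest Task Definition revision you've registered. Instead, it starts from the revision currently used by the running service, updates its container images from the build output (`imagedefinitions.json`), and registers the result as a new revision. The CodePipeline documentation states this explicitly: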

> The Amazon ECS standard deployment action for CodePipeline creates its own revision of the task definition based on the revision used by the Amazon ECS service. If you create new revisions for the task definition without updating the Amazon ECS service, the deployment action will ignore those revisions.

This means that any changes to the Task Definition won't be applied unless you manually force the service to use the newest Task Definition. This behavior is not immediately apparent and can lead to deployments that don't reflect your intended updates.
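To make this concrete, here is a deliberately simplified, pure-Python model of the behavior the documentation describes. It is not the action's actual implementation: revisions are plain dictionaries, and the helper `standard_deploy` and the container name `web` are hypothetical. It shows why a revision registered without updating the service never reaches production: the deployed revision is derived from the service's current one.

```python
from copy import deepcopy


def standard_deploy(revisions, service_revision, image_definitions):
    """Simplified model of the ECS standard deploy action (hypothetical helper).

    revisions: dict mapping revision number -> task definition dict
    service_revision: revision number currently used by the ECS service
    image_definitions: list of {"name": ..., "imageUri": ...}, as in imagedefinitions.json
    Returns the revision number the service ends up on.
    """
    # Start from the revision the *service* uses, not the newest one registered
    new_def = deepcopy(revisions[service_revision])
    images = {d["name"]: d["imageUri"] for d in image_definitions}
    # Only the container images are replaced; everything else is copied as-is
    for container in new_def["containerDefinitions"]:
        if container["name"] in images:
            container["image"] = images[container["name"]]
    # Register the result as the next revision; the service is then updated to it
    new_revision = max(revisions) + 1
    revisions[new_revision] = new_def
    return new_revision


revisions = {
    1: {"cpu": "1024", "memory": "2048",
        "containerDefinitions": [{"name": "web", "image": "repo:v1"}]},
}
# A smaller revision is registered, but the service is still on revision 1
revisions[2] = {"cpu": "512", "memory": "1024",
                "containerDefinitions": [{"name": "web", "image": "repo:v1"}]}

deployed = standard_deploy(revisions, service_revision=1,
                           image_definitions=[{"name": "web", "imageUri": "repo:v2"}])
print(deployed, revisions[deployed]["cpu"], revisions[deployed]["memory"])
# prints: 3 1024 2048
```

Revision 2, with the smaller CPU and memory values, is registered but never deployed: the new revision 3 keeps the old sizing and only picks up the new image.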

The Solution: Forcing ECS to Use the Latest Task Definition

To address this issue, I propose a CloudFormation-based solution that ensures the ECS service always uses the latest Task Definition. The key is a Lambda function that updates the ECS service to the most recent Task Definition revision and is invoked as part of the deployment pipeline.

How Does It Work?

- CloudFormation Template: The entire infrastructure, including the ECS cluster, service, task definition, Lambda function, and CodePipeline, is defined in a CloudFormation template for reproducibility and ease of management.

- CodePipeline Integration: Two deployment pipelines are used; which one runs depends on whether a commit to the GitHub repository changes the application code or the task definition.

- Lambda Function: In the pipeline that monitors Task Definition changes, a Lambda function named `updateECSservice` lists all active Task Definitions for a given family, selects the latest one, and updates the ECS service to use it.

The Lambda Function Code

The Lambda function is crucial to our solution. Here's the code embedded within the CloudFormation template:

```python
import boto3
import os
import json
import logging

# Set up logging
logger = logging.getLogger()
logger.setLevel(logging.INFO)

# Initialize AWS clients outside the handler for re-use across Lambda invocations
ecs_client = boto3.client('ecs')
codepipeline_client = boto3.client('codepipeline')


def lambda_handler(event, context):
    logger.info('Lambda function has started execution.')

    # Retrieve environment variables
    cluster_name = os.environ.get('CLUSTER_NAME')
    service_name = os.environ.get('SERVICE_NAME')
    family_prefix_name = os.environ.get('FAMILY_PREFIX_NAME')

    logger.info(f'Cluster Name: {cluster_name}')
    logger.info(f'Service Name: {service_name}')
    logger.info(f'Family Prefix Name: {family_prefix_name}')

    # Extract Job ID from the event
    try:
        job_id = event['CodePipeline.job']['id']
        logger.info(f'CodePipeline Job ID: {job_id}')
    except KeyError as e:
        logger.error('Job ID not found in the event.', exc_info=True)
        raise Exception('Job ID not found in the event.') from e

    try:
        # List active task definitions sorted by revision in descending order
        logger.info('Listing task definitions...')
        response = ecs_client.list_task_definitions(
            familyPrefix=family_prefix_name,
            status='ACTIVE',
            sort='DESC'
        )
        task_definitions = response.get('taskDefinitionArns', [])

        if not task_definitions:
            message = 'No active task definitions found.'
            logger.error(message)
            raise Exception(message)

        # Get the latest task definition ARN
        latest_task_definition = task_definitions[0]
        logger.info(f'Latest Task Definition ARN: {latest_task_definition}')

        # Update ECS service to use the latest task definition
        logger.info('Updating ECS service to use the latest task definition...')
        update_response = ecs_client.update_service(
            cluster=cluster_name,
            service=service_name,
            taskDefinition=latest_task_definition,
            forceNewDeployment=True
        )
        logger.info('ECS service updated successfully.')

        # Serialize the response to make it JSON serializable
        update_response_serialized = json.loads(json.dumps(update_response, default=str))
        logger.debug(f'Update Response: {update_response_serialized}')

        # Notify CodePipeline of a successful execution
        logger.info('Notifying CodePipeline of a successful execution...')
        codepipeline_client.put_job_success_result(jobId=job_id)
        logger.info('CodePipeline notified successfully.')

        return {
            'statusCode': 200,
            'body': json.dumps(update_response_serialized)
        }

    except Exception as e:
        logger.error('An error occurred during the Lambda execution.', exc_info=True)

        # Notify CodePipeline of a failed execution
        logger.info('Notifying CodePipeline of a failed execution...')
        codepipeline_client.put_job_failure_result(
            jobId=job_id,
            failureDetails={
                'message': f'Lambda function failed: {str(e)}',
                'type': 'JobFailed',
                'externalExecutionId': context.aws_request_id
            }
        )
        logger.info('CodePipeline notified of failure.')

        return {
            'statusCode': 500,
            'body': json.dumps({'error': str(e)})
        }
```
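The handler trusts `sort='DESC'` to put the newest revision first. If you want to verify that choice, for example in a unit test, the revision can also be read from the ARN itself, since task definition ARNs end in `task-definition/<family>:<revision>`. The helper names below are hypothetical and the ARNs are made up.

```python
def parse_task_definition_arn(arn):
    """Split an ECS task definition ARN into (family, revision).

    Expected format: arn:aws:ecs:<region>:<account>:task-definition/<family>:<revision>
    """
    resource = arn.split(":task-definition/", 1)[1]
    family, revision = resource.rsplit(":", 1)
    return family, int(revision)


def latest_task_definition_arn(arns, family):
    """Return the ARN with the highest revision for `family`, or None if there is none."""
    candidates = [a for a in arns if parse_task_definition_arn(a)[0] == family]
    if not candidates:
        return None
    # Compare revisions as integers so that 10 ranks above 9
    return max(candidates, key=lambda a: parse_task_definition_arn(a)[1])


arns = [
    "arn:aws:ecs:us-east-1:123456789012:task-definition/demo-ecs-service-task-definition:9",
    "arn:aws:ecs:us-east-1:123456789012:task-definition/demo-ecs-service-task-definition:10",
    "arn:aws:ecs:us-east-1:123456789012:task-definition/other-family:42",
]
print(latest_task_definition_arn(arns, "demo-ecs-service-task-definition"))
```

Parsing the revision as an integer matters: compared as strings, revision `9` would rank above revision `10`.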

Implementing the Solution

Copy the Repository: Create a new repository that you own from the sample repository template (on GitHub, via Use this template > Create a new repository).

Create a Connection to GitHub: Since the pipelines rely on your GitHub repository as the source, you'll need to set up an AWS CodeConnections connection to GitHub.

- Steps to Create the Connection:

  - Navigate to AWS CodeConnections: Go to the AWS Management Console and open the AWS CodeConnections page.

  - Create a New Connection: Click Create connection, then select GitHub as the provider type.

  - Authorize AWS to Access GitHub: Follow the prompts to authenticate with your GitHub account. You'll be asked to grant AWS permissions to access your repositories; make sure you authorize the correct repositories or organizations.

  - Retrieve the Connection ARN: Once the connection is established, note the Connection ARN. You'll need this value for the `GitHubConnectionArn` parameter in the CloudFormation template.

Note 1: The connection allows AWS services such as CodePipeline to interact securely with your GitHub repository. It lets pipelines start automatically on code changes and fetches the latest code in the pipeline's source stage.

Note 2: AWS CodeConnections is the new name of AWS CodeStar Connections.
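Before deploying, it can be worth confirming that the connection is usable: a connection created from the CLI, API, or CloudFormation starts in `PENDING` status and only becomes `AVAILABLE` once the GitHub handshake is completed in the console. The sketch below filters a `ListConnections` response for available GitHub connections. The field names follow that API (`Connections`, `ConnectionArn`, `ProviderType`, `ConnectionStatus`); the helper name and sample data are made up, and the boto3 call appears only in a comment so the function can run offline.

```python
def available_github_connection_arns(list_connections_response):
    """Return ARNs of GitHub connections whose status is AVAILABLE.

    `list_connections_response` is the dict returned by ListConnections, e.g. from
    boto3.client("codeconnections").list_connections(ProviderTypeFilter="GitHub")
    (older SDKs expose the same API through the "codestar-connections" client).
    Only this response page is inspected; follow NextToken for more results.
    """
    return [
        conn["ConnectionArn"]
        for conn in list_connections_response.get("Connections", [])
        if conn.get("ProviderType") == "GitHub" and conn.get("ConnectionStatus") == "AVAILABLE"
    ]


# Made-up sample response for illustration
sample = {
    "Connections": [
        {"ConnectionName": "github-demo", "ProviderType": "GitHub",
         "ConnectionStatus": "AVAILABLE",
         "ConnectionArn": "arn:aws:codeconnections:us-east-1:123456789012:connection/example-1"},
        {"ConnectionName": "github-pending", "ProviderType": "GitHub",
         "ConnectionStatus": "PENDING",
         "ConnectionArn": "arn:aws:codeconnections:us-east-1:123456789012:connection/example-2"},
    ]
}
print(available_github_connection_arns(sample))
```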

Prepare the ECS Container image: Run the following commands to upload the first version of the container image.

```bash
aws ecr get-login-password --region <REGION_ID> | docker login --username AWS --password-stdin <ACCOUNT_ID>.dkr.ecr.<REGION_ID>.amazonaws.com
aws ecr create-repository --repository-name demo-ecs-service-image --region <REGION_ID>
docker build -t <ACCOUNT_ID>.dkr.ecr.<REGION_ID>.amazonaws.com/demo-ecs-service-image .
docker push <ACCOUNT_ID>.dkr.ecr.<REGION_ID>.amazonaws.com/demo-ecs-service-image
```

Note: The image should be built for the x86_64 (`linux/amd64`) architecture. On an ARM-based machine such as an Apple Silicon Mac, add `--platform linux/amd64` to the `docker build` command.

Deploy the Stack: Use AWS CloudFormation to deploy the stack, either from the AWS Management Console, the AWS CLI, or an AWS SDK. Using the AWS CLI:

```bash
aws cloudformation deploy \
  --template-file cloudformation-template.yml \
  --stack-name your-stack-name \
  --capabilities CAPABILITY_NAMED_IAM \
  --parameter-overrides \
    GitHubConnectionArn=your-connection-arn \
    GitHubOwner=your-github-username \
    GitHubRepoName=your-repo-name \
    GitHubBranch=main \
    ECRRepositoryName=demo-ecs-service-image \
    ClusterName=demo-ecs-cluster \
    ServiceName=demo-ecs-service \
    TaskDefinitionFamily=demo-ecs-service-task-definition \
    VpcId=your-vpc-id \
    SubnetIds=your-subnet-ids-separated-by-commas
```

Note: The deployment can take around 5 minutes.
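Once the stack reaches `CREATE_COMPLETE`, its outputs (including the ALB URL used in the Testing section) can also be read programmatically. A minimal sketch, assuming the response shape of CloudFormation's `DescribeStacks` (`Stacks[0]["Outputs"]`, each entry with `OutputKey` and `OutputValue`); the helper name is hypothetical, and so is the output key `LoadBalancerURL`, so check the template for the real key.

```python
def stack_outputs(describe_stacks_response):
    """Flatten the Outputs of the first stack in a DescribeStacks response into a dict."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        return {}
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


# With credentials configured, the response would come from:
#   boto3.client("cloudformation").describe_stacks(StackName="your-stack-name")
# Made-up sample response; "LoadBalancerURL" is a hypothetical output key
sample = {
    "Stacks": [{
        "StackName": "your-stack-name",
        "StackStatus": "CREATE_COMPLETE",
        "Outputs": [{"OutputKey": "LoadBalancerURL",
                     "OutputValue": "http://demo-alb-123456.us-east-1.elb.amazonaws.com"}],
    }]
}
print(stack_outputs(sample)["LoadBalancerURL"])
```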

Monitor the Pipelines: The two CodePipelines will monitor code changes and task definition changes separately, triggering builds and deployments accordingly.

- Pipeline for Code Changes: Detects changes in your application code and Dockerfile, builds a new image, pushes it to ECR, and updates the ECS service.

- Pipeline for Task Definition Changes: Detects changes in your task definition files, registers a new Task Definition, and invokes the Lambda function to update the ECS service.

Note: Both pipelines are triggered automatically when the CloudFormation stack is deployed. The pipeline that monitors task definition changes might fail on its first run, because it relies on an ECS service that may not have been created yet.

Understanding the Pipelines and Build Projects

Trigger Filters and Pipeline Activation

A crucial part of this solution is how we use trigger filters in CodePipeline to decide which pipeline to activate based on the changes made in the GitHub repository. We have two separate pipelines:

- Pipeline for Code Changes: Monitors changes in application code and Dockerfile.

- Pipeline for Task Definition Changes: Monitors changes in the task definition and build specification files.

How Trigger Filters Work:

- Trigger Filters: In each pipeline, we define trigger filters that specify which files or directories, when changed, should start that pipeline.

- Includes: The `Includes` parameter lists file path patterns. When a commit changes a file that matches one of these patterns, the corresponding pipeline is triggered.
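To reason about which pipeline a given commit will start, the include logic can be approximated locally. The sketch below uses Python's `fnmatch`, which only approximates CodePipeline's glob matching (for example, `fnmatch`'s `*` also matches `/`). The pipeline names and pattern lists are hypothetical; the real ones are defined in each pipeline's trigger configuration in the CloudFormation template.

```python
from fnmatch import fnmatch

# Hypothetical include patterns; the real ones live in the pipelines' trigger filters
PIPELINE_INCLUDES = {
    "code-changes": ["app/*", "Dockerfile"],
    "task-definition-changes": ["task-definition.json", "buildspec-taskdef.yml"],
}


def triggered_pipelines(changed_files, includes=PIPELINE_INCLUDES):
    """Return, sorted, the pipelines whose include patterns match at least one changed file."""
    return sorted(
        name
        for name, patterns in includes.items()
        if any(fnmatch(path, pattern) for path in changed_files for pattern in patterns)
    )


print(triggered_pipelines(["app/index.html"]))        # ['code-changes']
print(triggered_pipelines(["task-definition.json"]))  # ['task-definition-changes']
print(triggered_pipelines(["README.md"]))             # []
```

A commit that touches files from both sets would start both pipelines, while a commit touching neither starts none.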

The Two CodeBuild Projects Explained

1. CodeBuild Project for Code Changes (CodeBuildProjectOnlyCodeChanges):

- Purpose: Builds the Docker image of your application whenever there's a change in the application code or Dockerfile.

- Actions Performed:

- Build the Docker Image: Compiles your application code and builds a Docker image.

- Push to ECR: Tags and pushes the newly built image to the Amazon ECR repository.

- Generate `imagedefinitions.json`: Creates a file that specifies the image to be used by the ECS service, which is used in the deploy stage.

2. CodeBuild Project for Task Definition Changes (CodeBuildProjectOnlyTaskDefChanges):

- Purpose: Registers a new ECS Task Definition whenever there's a change in the task definition file.

- Actions Performed:

- Register Task Definition: Uses the `task-definition.json` file to create a new task definition revision.

- Prepare for Deployment: Ensures that the ECS service can pick up the new task definition.
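The `imagedefinitions.json` file produced by the code-changes build is the input of the ECS standard deploy action: a JSON array with one object per container, each holding the container `name` and the `imageUri` to deploy. A buildspec usually writes it with a shell one-liner; the Python sketch below produces the same structure. The helper name, the container name `demo-container`, and the image tag are hypothetical; the name must match the container name in the task definition.

```python
import json
import os
import tempfile


def write_image_definitions(container_name, image_uri, path):
    """Write the imagedefinitions.json file consumed by the ECS standard deploy action."""
    # One entry per container to update: its name and the image to deploy
    definitions = [{"name": container_name, "imageUri": image_uri}]
    with open(path, "w") as f:
        json.dump(definitions, f)
    return definitions


out_path = os.path.join(tempfile.mkdtemp(), "imagedefinitions.json")
write_image_definitions(
    "demo-container",  # hypothetical: must match the container name in the task definition
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/demo-ecs-service-image:abc1234",
    out_path,
)
with open(out_path) as f:
    print(f.read())
```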

How the Pipelines Work Together

- Separation of Concerns: By splitting the pipelines, we can independently manage code changes and task definition changes without unnecessary deployments.

- Efficient Deployments: Only the relevant pipeline is triggered based on the changes, saving time and resources.

- Lambda Function Integration: The task definition changes pipeline includes an Invoke action that calls the Lambda function to update the ECS service with the latest task definition.

Testing

Changing the application code

Let's slightly modify the application code packaged within the container and see how it is deployed automatically.

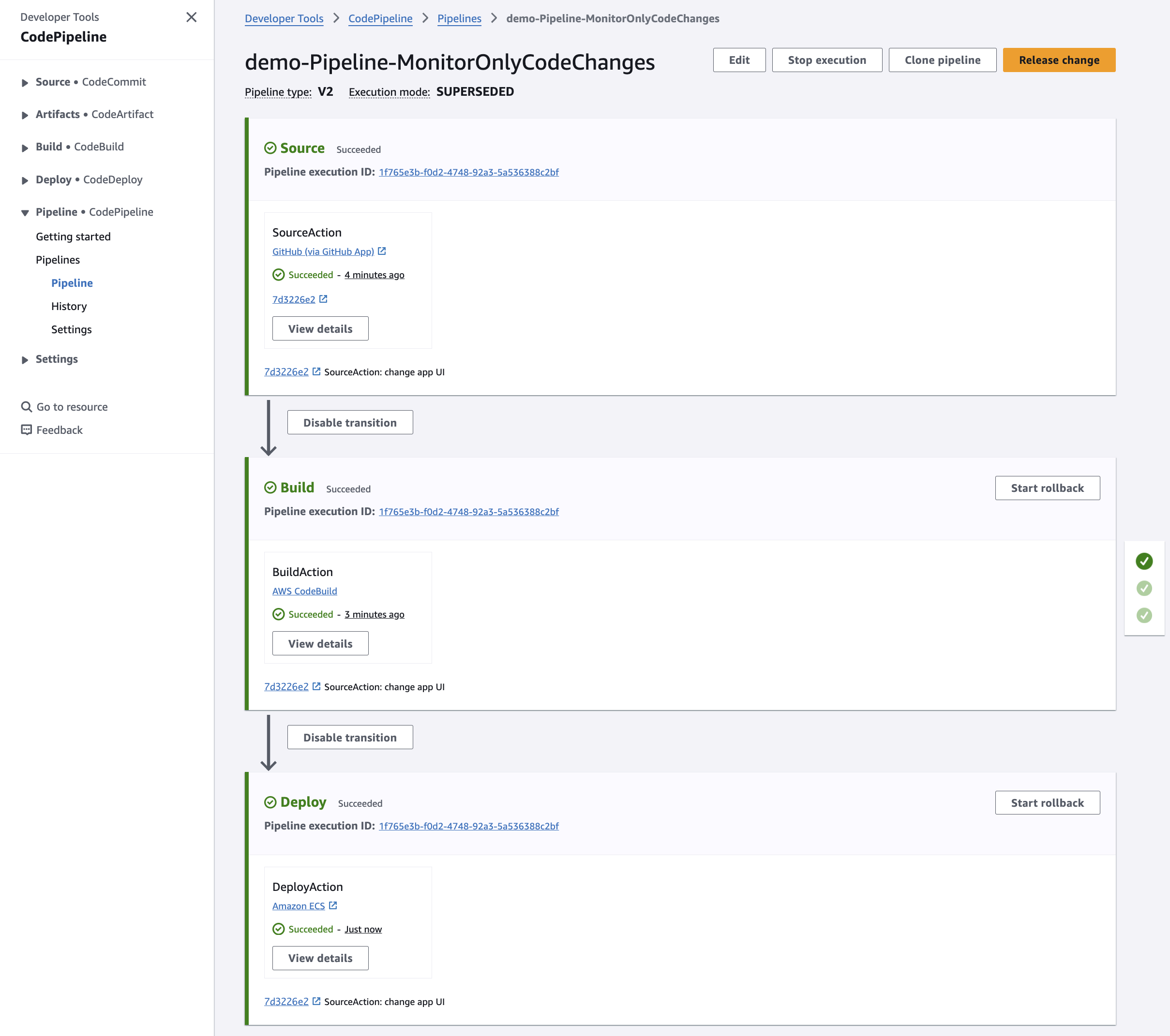

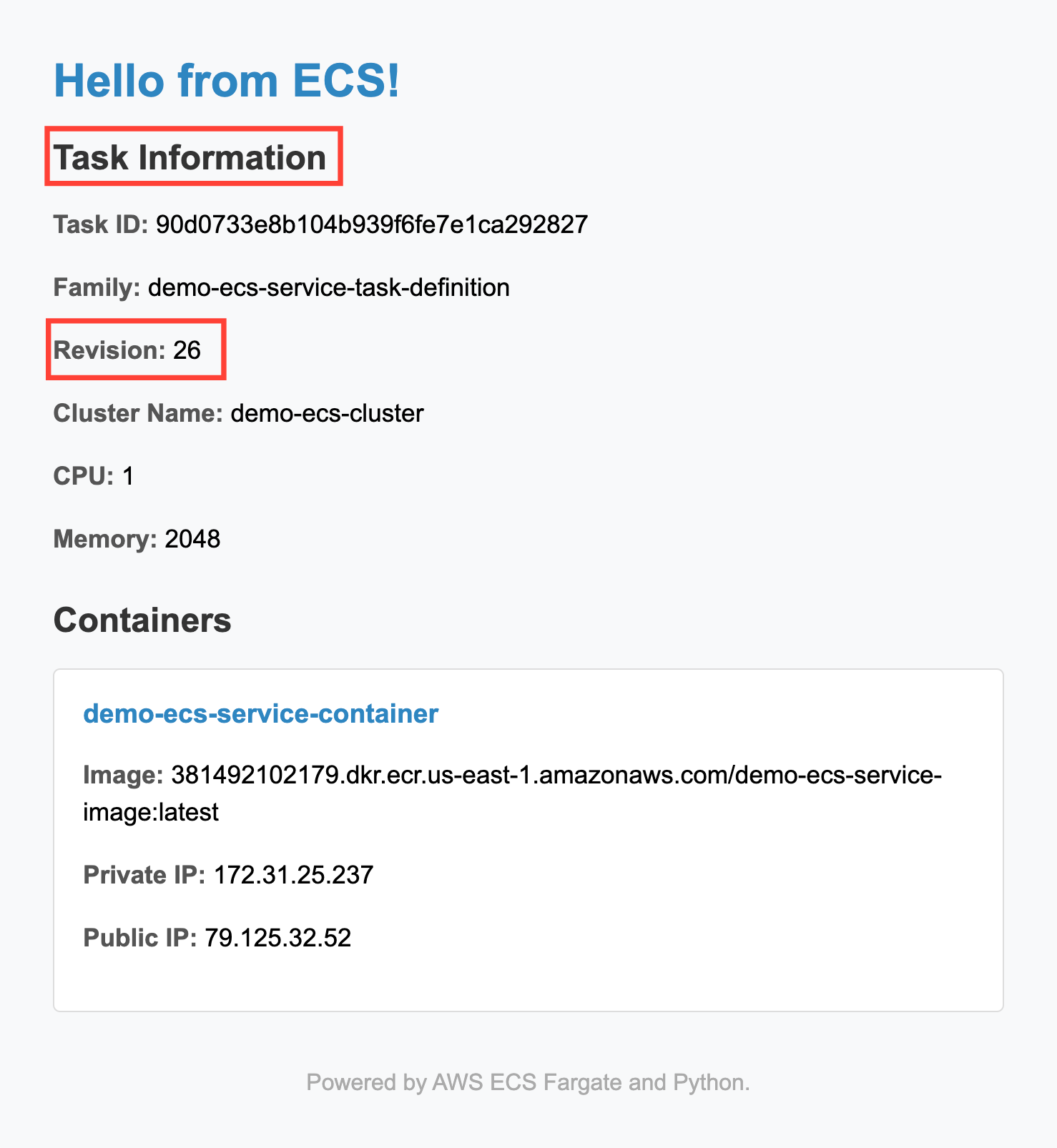

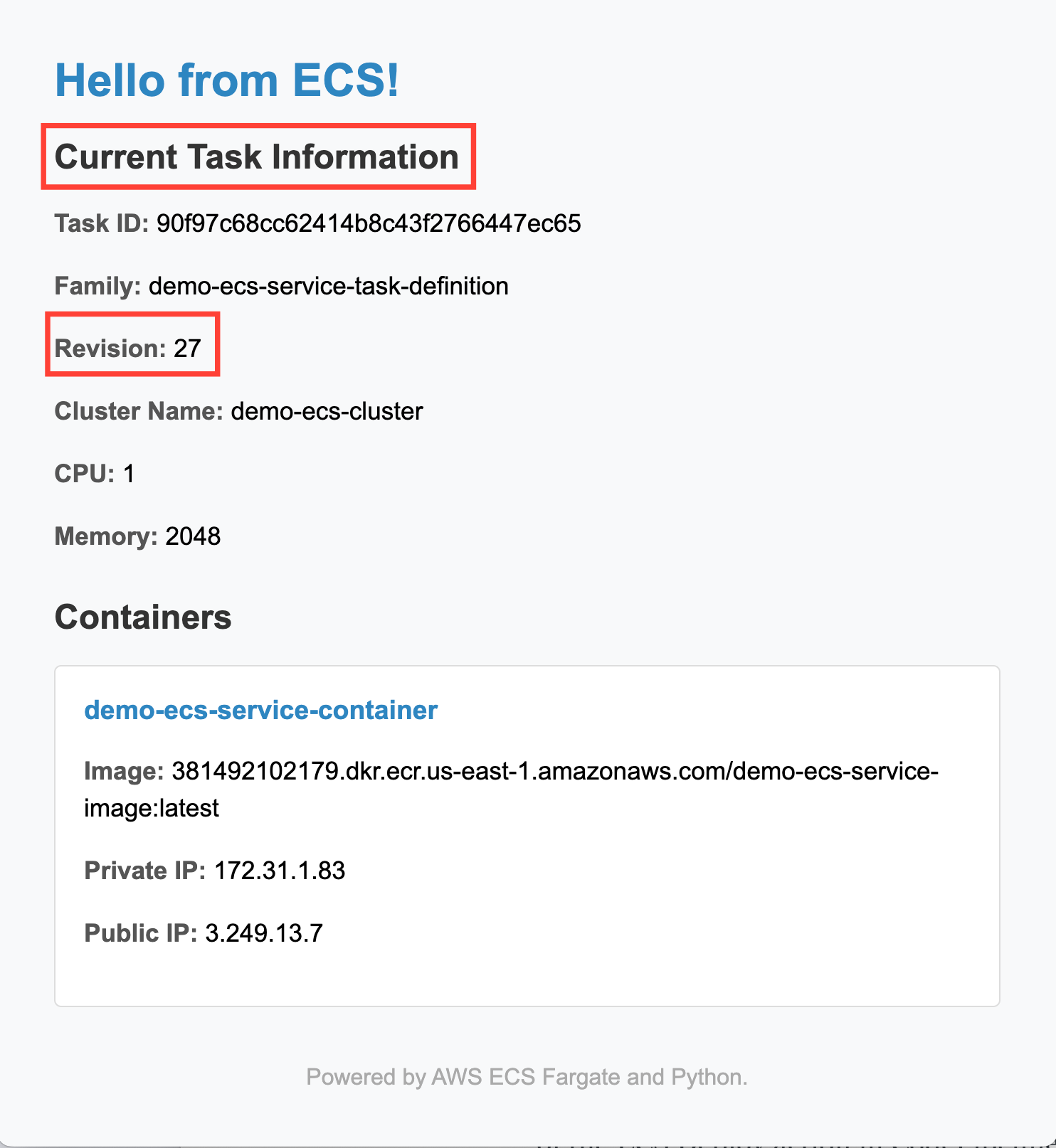

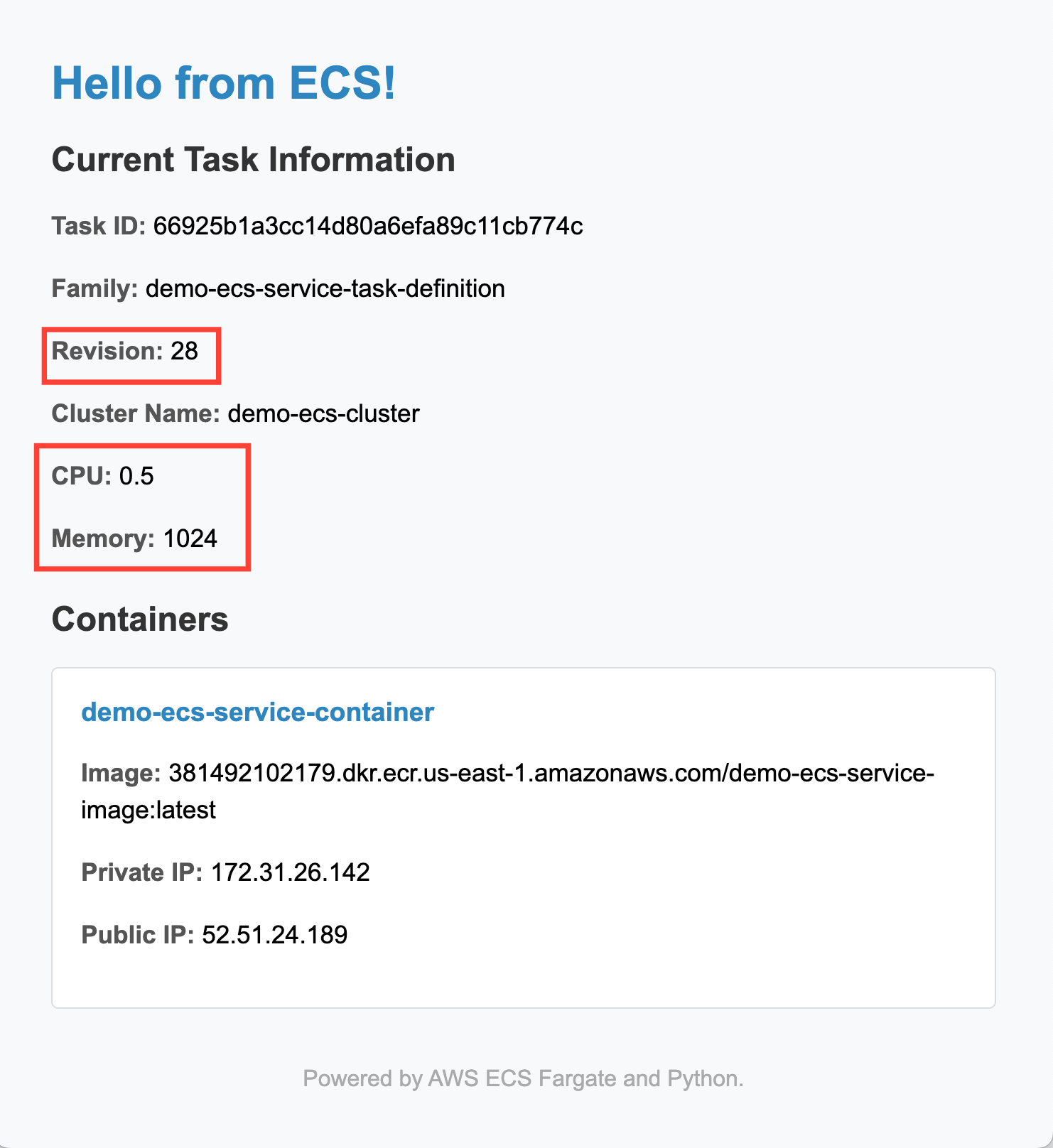

In the index.html file, I change the header "Task Information" to "Current Task Information" and then type git commit -a -m "change HTML code" and git push. This triggers the execution of the pipeline that monitors code changes. After a few minutes, the change is visible by connecting to the ALB's URL (accessible from the CloudFormation outputs section):

Changing the Task Definition

Now, I'll change the CPU and memory settings of the task.

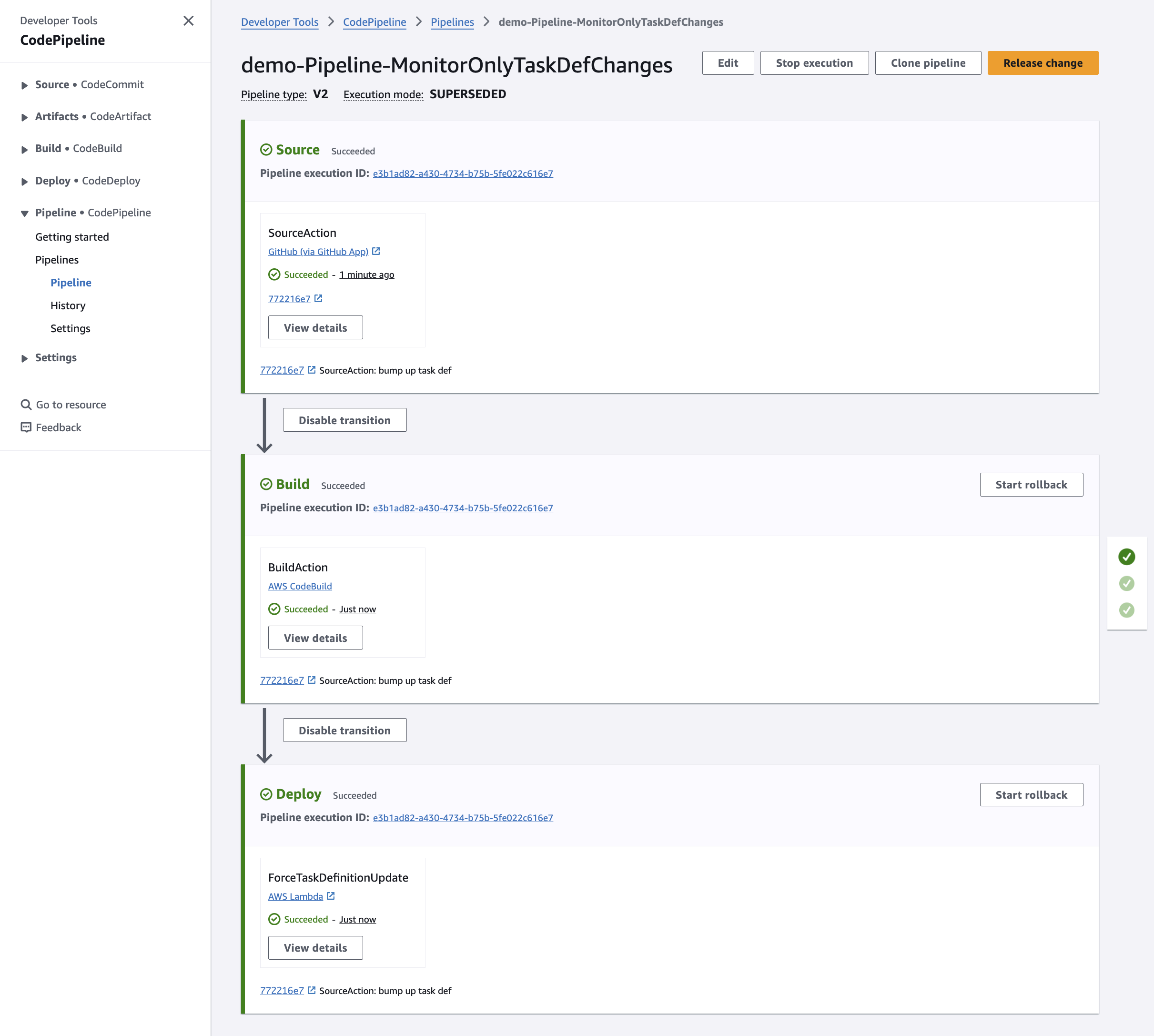

In the task-definition.json file, I'll edit these values from: "cpu": "1024" and "memory": "2048" to "cpu": "512" and "memory": "1024". Then type: git commit -a -m "change task definition" and git push. This triggers the execution of the pipeline that monitors task definition changes:

Note how the revision number changes every time we change either the application code or the task definition.
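The change above moves the task from 1 vCPU / 2 GiB to 0.5 vCPU / 1 GiB. Assuming the service runs on AWS Fargate (the VPC and subnet parameters point to `awsvpc` networking, but the launch type isn't stated in this post), only certain task-level CPU/memory pairs are valid, and ECS rejects a task definition with any other pair. The sketch below checks a pair against the Fargate table for 256 to 4096 CPU units before writing it into a task definition dict; the helper name `set_task_size` is hypothetical, and the larger 8192 and 16384 sizes are left out for brevity.

```python
import json

# Fargate task-level CPU units -> allowed memory values in MiB (256 to 4096 CPU units only)
FARGATE_MEMORY_OPTIONS = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def set_task_size(task_definition, cpu, memory):
    """Return a copy of a task definition dict with new cpu/memory after a Fargate validity check."""
    if memory not in FARGATE_MEMORY_OPTIONS.get(cpu, []):
        raise ValueError(f"cpu={cpu} with memory={memory} is not a valid Fargate combination")
    updated = dict(task_definition)
    # task-definition.json stores these values as strings, e.g. "cpu": "512"
    updated["cpu"], updated["memory"] = str(cpu), str(memory)
    return updated


current = {"family": "demo-ecs-service-task-definition", "cpu": "1024", "memory": "2048"}
print(json.dumps(set_task_size(current, 512, 1024)))
```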

Conclusion

By integrating a Lambda function into the deployment pipeline, we can force the ECS service to use the latest Task Definition, ensuring that all updates are correctly applied during deployments. This solution addresses the default behavior of the ECS Deploy action in CodePipeline, providing a more predictable and reliable deployment process.

Key Takeaways:

- As the CodePipeline documentation notes, the standard ECS Deploy action bases each deployment on the Task Definition revision currently used by the service, so revisions registered without updating the service are ignored.

- Incorporating a Lambda function to update the ECS service ensures the latest Task Definition is used.